Balena Device Debugging Masterclass

Prerequisite Classes

This masterclass builds upon knowledge that has been taught in previous classes. To gain the most from this masterclass, we recommend that you first undertake the following masterclasses:

Introduction

At balena, we believe the best people to support a customer are the engineers who build the product. They have the depth and breadth of knowledge that can quickly identify and track down issues that traditional support agents usually do not. Not only does this help a customer quickly and efficiently solve most issues, but it also immerses balena engineers in sections of the product they might not otherwise encounter in their usual working life, which further improves the support each engineer can offer. This masterclass has been written as an initial guide for new engineers about to start support duties.

Whilst the majority of devices never see an issue, occasionally a customer will contact balena support with a query where one of their devices is exhibiting anomalous behavior.

Obviously, no guide can cover the range of queries that may occur, but it can give an insight into how to tackle problems and the most common problems that a balena support agent sees, as well as some potential solutions to these problems. In compiling this document, a group of highly seasoned balena engineers discussed their techniques for discovering and resolving on-device issues, as well as techniques for determining how best to mitigate an issue being exhibited.

In this masterclass, you will learn how to:

- Gain access to a customer device, when permission has been granted

- Retrieve initial diagnostics for the device

- Identify and solve common networking problems

- Work with the Supervisor

- Work with balenaEngine

- Examine the Kernel logs

- Understand media-based issues (such as SD card corruption)

- Understand how heartbeat and the VPN only status affects your devices

Whilst this masterclass is intended for new engineers about to start support duties at balena, it is also intended to act as an item of interest to customers who wish to know more about how we initially go about debugging a device (and includes information that customers themselves could use to give a support agent more information). We recommend, however, ensuring balena support is always contacted should you have an issue with a device that is not working correctly.

Note: The balena VPN service was renamed to cloudlink in 2022 in customer facing documentation.

Hardware and Software Requirements

It is assumed that the reader has access to the following:

- A local copy of this repository Balena Device Debugging Masterclass. This copy can be obtained by either method:

git clone https://github.com/balena-io-projects/debugging-masterclass.git- Download ZIP file (from 'Clone or download'->'Download ZIP') and then unzip it to a suitable directory

- A balena supported device, such as a balenaFin 1.1, Raspberry Pi 3 or Intel NUC. If you don't have a device, you can emulate an Intel NUC by installing VirtualBox and following this guide

- A suitable shell environment for command execution (such as

bash) - A balenaCloud account

- A familiarity with Dockerfiles

- An installed instance of the balena CLI

Exercises

The following exercises assume access to a device that has been provisioned. As per the other masterclasses in this series we're going to assume that's a Raspberry Pi 4, however you can simply alter the device type as appropriate in the following instructions. The balena CLI is going to be used instead of the WebTerminal in the balenaCloud Dashboard for accessing the device, but all of the exercises could be completed using the WebTerminal if preferred.

First login to your balena account via balena login, and then create a new

fleet:

$ balena fleet create DebugFleet --type raspberrypi4-64 --organization ryanh

Fleet created: slug "ryanh/debugfleet", device type "raspberrypi4-64"Now provision a device by downloading and flashing a development image from the Dashboard (via Etcher), or by flashing via the command line.

$ balena os download raspberrypi4-64 --version "2022.7.0.dev" --output balena-debug.img

Getting device operating system for raspberrypi4-64

balenaOS image version 2022.7.0 downloaded successfullyNote: Above, we used a balenaOS Extended Support Release (ESR). These ESRs are currently available for many device types, but only on paid plans and balena team member accounts. If you are going through this masterclass on a free plan, just pick the latest release available and the remainder of the guide is still applicable.

Carry out any configuration generation required, should you be using a Wifi AP and inject the configuration into the image (see balena CLI Advanced Masterclass for more details), or use a configuration for an ethernet connection:

$ balena os configure balena-debug.img --fleet DebugFleet --config-network=ethernet

Configuring operating system image

$ balena util available-drives

DEVICE SIZE DESCRIPTION

/dev/disk2 31.9 GB TS-RDF5 SD Transcend Media

$ balena os initialize balena-debug.img --type raspberrypi4-64 --drive /dev/disk2 --yes

Initializing device

Note: Initializing the device may ask for administrative permissions

because we need to access the raw devices directly.

Going to erase /dev/disk2.

Admin privileges required: you may be asked for your computer password to continue.

Writing Device OS [========================] 100% eta 0s

Validating Device OS [========================] 100% eta 0s

You can safely remove /dev/disk2 nowYou should now have a device that will appear as part of the DebugFleet fleet:

$ balena devices | grep debugfleet

7830516 9294512 average-fire raspberrypi4-64 debugfleet Idle true 14.0.8 balenaOS 2022.7.0 https://dashboard.balena-cloud.com/devices/92945128a17b352b155c2ae799791389/summaryFor convenience, export a variable to point to the root of this masterclass repository, as we'll use this for the rest of the exercises, eg:

$ export BALENA_DEBUGGING_MASTERCLASS=~/debugging-masterclassFinally, push the code in the multicontainer-app directory to the fleet:

$ cd $BALENA_DEBUGGING_MASTERCLASS/multicontainer-app

$ balena push DebugFleet1. Accessing a User Device

Any device owned by you will automatically allow access via either the WebTerminal (in the device's Dashboard page), or the balena CLI via balena ssh <uuid>. However, for a support agent to gain access to a device that isn't owned by them, you would need to grant access for it explicitly.

1.1 Granting Support Access to a Support Agent

It is possible to grant support access to a device, all devices in a fleet or to a block to enable support and device troubleshooting by balena employees.

Access is granted for a set, user-defined period, and access may be revoked at any time. Access for support agents is limited, which includes variables and configurations (both fleet and device), the ability to reboot the device, apply balenaOS upgrades, pin releases, etc. This is not a comprehensive list and may be subject to further changes.

This ensures that a device under investigation cannot be unnecessarily altered or modified. Support investigations are intended as an avenue of exploration and research for ensuring that issues are categorized to allow improvements to the product surface such that these issues are eliminated.

Once support access has been granted, a support agent will be able to use the UUID of a device to gain access to it. Support access is enabled via SSH over cloudlink, so the device must be online and connected to cloudlink. Alternatively, it may be possible to access a problematic device from a gateway device operating on the same network.

Note: It is possible to disable support access functionality by removing the balena SSH public key from the device. However, this will render the device inaccessible remotely for the purposes of support and updates to the host OS. For more details see our security documentation.

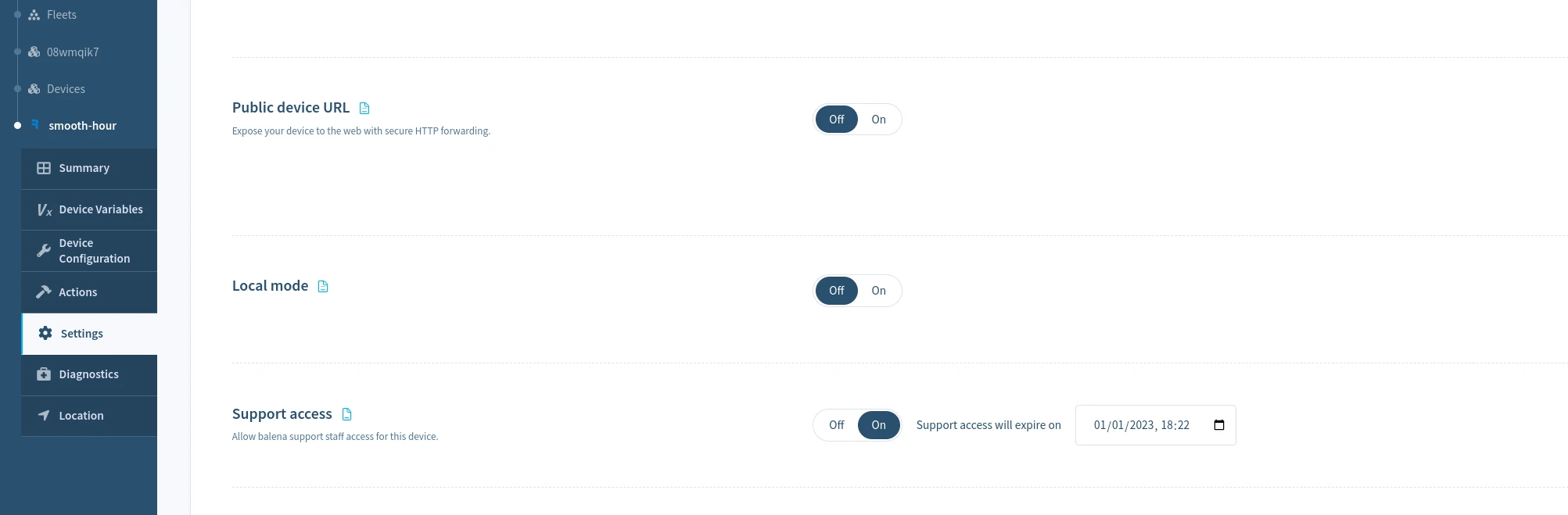

Grant support access for a device

To enable support access for a single device, select the Actions menu in the Device dashboard, and choose the Grant Support Access button and choose the period to grant device access. You may revoke access at any time by selecting Revoke Support Access on the same page.

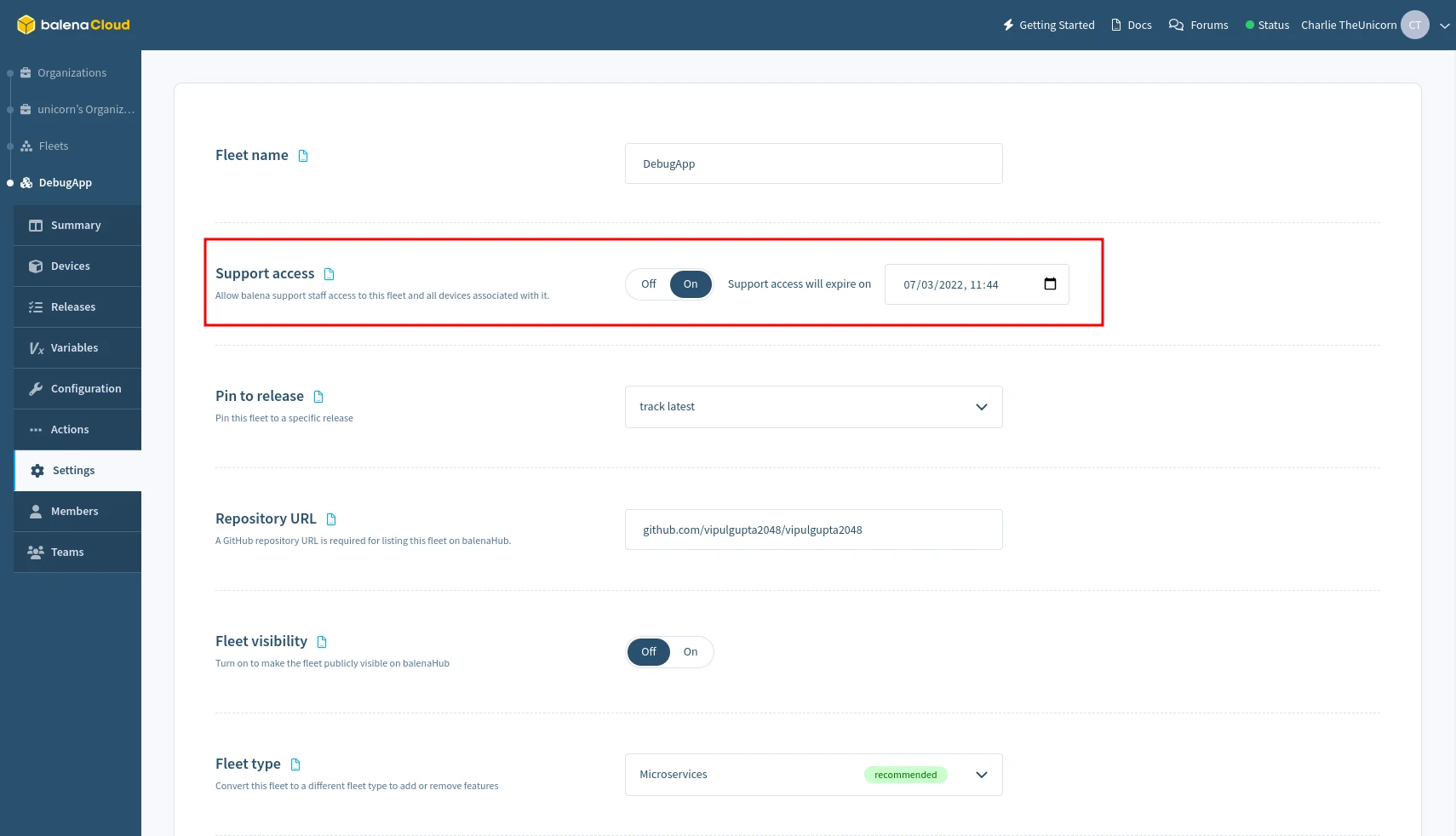

Grant support access for a fleet

To enable support access for all devices in a fleet, select the Grant Support Access from the Settings menu of the Fleet dashboard, and choose the period to grant access. This may be revoked at any time by selecting Revoke Support Access on the same page.

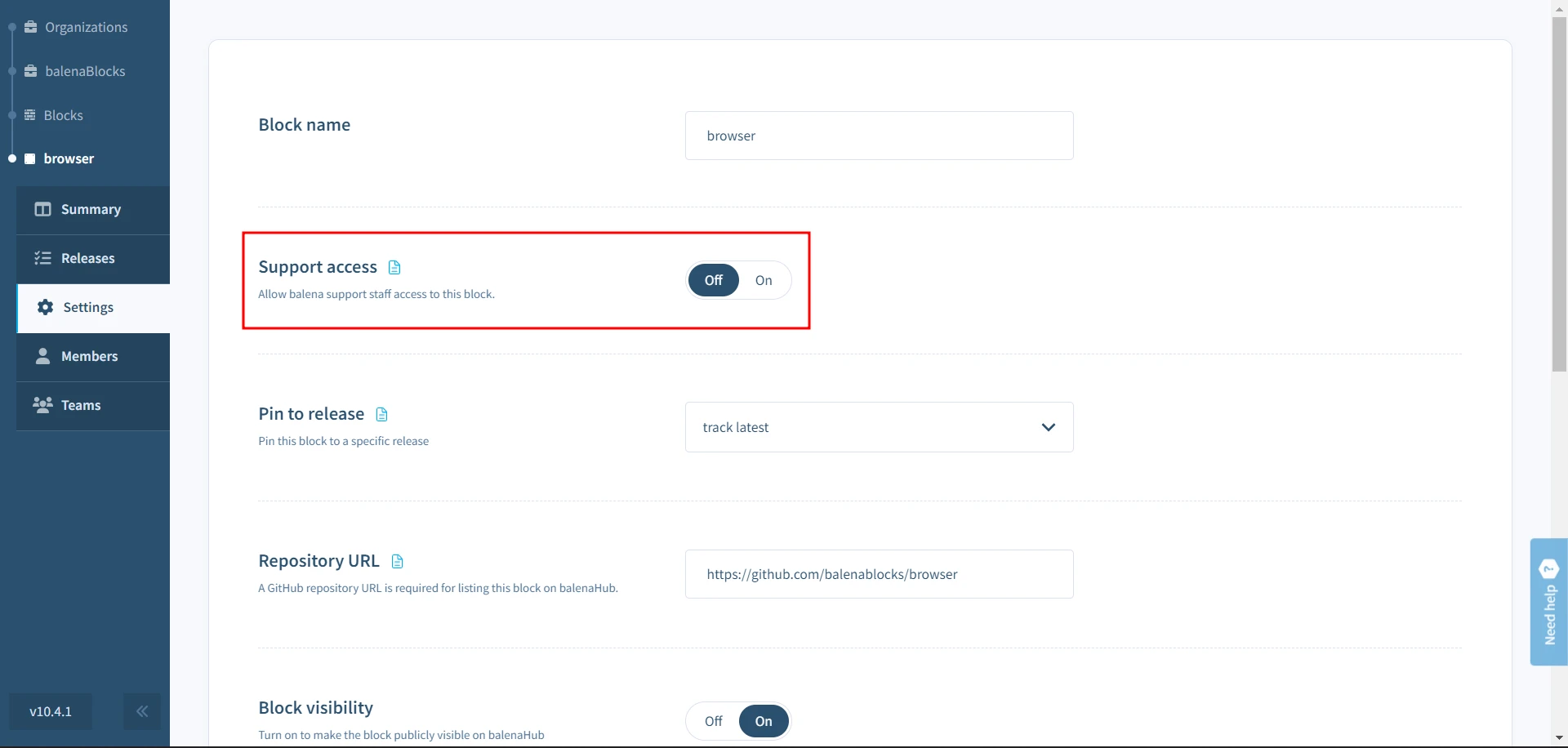

Grant support access for a block

To enable support access for block, select the Grant Support Access from the Settings menu of the block dashboard, and choose the period to grant access. This may be revoked at any time by selecting Revoke Support Access on the same page.

Grant support access using the CLI

To grant or revoke support access for devices or fleets, use the balena support <action> command. Refer to CLI Support access docs for more information.

2. Initial Diagnosis

The balenaCloud Dashboard includes the ability to run diagnostics on a device to determine its current condition. This should be the first step in attempting to diagnose an issue without having to actually access the device via SSH. Ensuring diagnostics and health checks are examined first ensures that you have a good idea of the state a device is in before SSHing into it, as well as ensuring that the information can be accessed later if required (should a device be in a catastrophic state). This helps significantly in a support post-mortem should one be required.

2.1 Device Health Checks

To run health checks through balenaCloud dashboard, head to the Diagnostics tab in the sidebar and click the Run checks button to start the tests.

This will trigger a set of health checks to run on the device. You should see all the checks as Succeeded in the Success column if the device is healthy and there are no obvious faults.

That's no fun, let's create a fault.

SSH into your device, via balena ssh <UUID>, using the appropriate UUID. We want to

SSH into the host OS, as that's where we'll wreak havoc:

$ balena ssh 9294512

=============================================================

Welcome to balenaOS

=============================================================

root@9294512:~#We're going to do a couple of things that will show up as problems. Something you'll often check, and that we'll discuss later, is the state of the balena Supervisor and balenaEngine.

First of all, we're going to kill the balenaEngine maliciously without letting it shut down properly:

root@9294512:~# ps aux | awk '!/awk/ && /balenad/ {print $2}' | xargs kill -9What this does is list the processes running, look for the balenad executable

(the balenaEngine itself) and then stop the engine with a SIGKILL signal,

which will make it immediately terminate instead of shutting down correctly.

In fact, we'll do it twice. Once you've waited about 30 seconds, run the command

again.

Now if you run the health checks again. After a couple minutes, you'll see the 'check_container_engine` section has changed:

| Check | Status | Notes |

|---|---|---|

| check_container_engine | Failed | Some container_engine issues detected: |

| test_container_engine_running_now Container engine balena is NOT running | ||

| test_container_engine_restarts Container engine balena has 2 restarts and may be crashlooping (most recent start time: Thu 2022-08-18 11:14:32 UTC) | ||

| test_container_engine_responding Error querying container engine: |

Unclean restarts usually mean that the engine crashed abnormally with an issue.

This usually happens when something catastrophic occurs between the Supervisor

and balenaEngine or corruption occurs in the image/container/volume store.

Let's take a look at the journal for balenaEngine (balena.service) on the

device:

root@9294512:~# journalctl --no-pager -n 400 -u balena.serviceYou'll see a lot of output, as the logs don't just show the balenaEngine output but the output from the Supervisor as well. However, if you search through the output, you'll see a line like the following:

Aug 18 11:14:32 9294512 systemd[1]: balena.service: Main process exited, code=killed, status=9/KILLAs you can see, the balena.service was killed with a SIGKILL instruction.

You can also see the two times that our services were attempted to start after the engine was killed and restarted automatically by running:

root@7db55ce:~# journalctl --no-pager -n 400 -u balena.service | grep frontend -A 5

...

Aug 18 11:15:05 9294512 89fe7a71a40d[6061]: > [email protected] start /usr/src/app

Aug 18 11:15:05 9294512 89fe7a71a40d[6061]: > node index.js

Aug 18 11:15:05 9294512 89fe7a71a40d[6061]:

Aug 18 11:15:06 9294512 422820756f15[6061]:

Aug 18 11:15:06 9294512 422820756f15[6061]: > [email protected] start /usr/src/app

Aug 18 11:15:06 9294512 422820756f15[6061]: > node index.jsAs you can see, these have now been specifically output for the two running service containers.

If you only want to see balenaEngine output and not from any of the service

containers it is running, use journalctl -u balena.service -t balenad. The

-t is the shortened form of --identifier=<id>, which in this case ensures

that only messages from the balenad syslog identifier are shown.

We'll discuss issues with balenaEngine and the Supervisor later in this masterclass.

There are many other health checks that can immediately expose a problem. For example, warnings on low free memory or disk space can expose problems which will exhibit themselves as release updates failing to download, or service containers restarting abnormally (especially if a service runs unchecked and consumes memory until none is left). We'll also go through some of these scenarios later in this masterclass.

Checkout the Diagnostics page for more information on tests you can run on the device.

3. Device Access Responsibilities

When accessing a customer's device you have a number of responsibilities, both technically and ethically. A customer assumes that the support agent has a level of understanding and competence, and as such support agents should ensure that these levels are met successfully.

There are some key points which should be followed to ensure that we are never destructive when supporting a customer:

- Always ask permission before carrying out non-read actions. This includes situations such as stopping/restarting/starting services which are otherwise functional (such as the Supervisor). This is especially important in cases where this would stop otherwise functioning services (such as stopping balenaEngine).

- Ensure that the customer is appraised of any non-trivial non-read actions that you are going to take before you carry those actions out on-device. If they have given you permission to do 'whatever it takes' to get the device running again, you should still pre-empt your actions by communicating this clearly.

- During the course of carrying out non-read actions on a device, should the customer be required to answer a query before being able to proceed, make it clear to them what you have already carried out, and that you need a response before continuing. Additionally ensure that any incoming agents that may need to access the device have all of your notes and actions up to this point, so they can take over in your stead.

- Never reboot a device without permission, especially in cases where it appears that there is a chance that the device will not recover (which may be the case in situations where the networking is a non-optimal state). It is imperative in these cases that the customer is made aware that this could be an outcome in advance, and that they must explicitly give permission for a reboot to be carried out.

Occasionally it becomes very useful to copy files off from a device, so that they can be shared with the team. This might be logfiles, or the Supervisor database, etc.

A quick way of copying data from a device with a known UUID onto a local machine is to use SSH with your balena support username:

ssh -o LogLevel=ERROR -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -p 22 ${USER}@ssh.balena-devices.com host -s ${UUID} 'cat ${PATH_TO_FILE}' > ${LOCAL_PATH}You can copy data from your local machine onto a device by piping the file in instead:

ssh -o LogLevel=ERROR -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -p 22 ${USER}@ssh.balena-devices.com host -s ${UUID} 'cat > ${PATH_TO_FILE}' < ${LOCAL_PATH}4. Accessing a Device using a Gateway Device

It may not always be possible to access the device directly, especially if the the VPN component isn't working.

Usually, we're able to stay connected to the device when the OpenVPN service isn't running because we're using a development image, and development images allow passwordless root connections via SSH. Had we been running a production image, then the device would have been in the 'Offline' state, but it would still potentially have had network access and be otherwise running correctly. This brings up an issue though, how can we connect to a faulty production device in the field?

The answer comes from the mechanism behind how SSH is tunneled through the VPN, and we can actually use another device (in the 'Online' state) on the same local network as an 'Offline' device to do this.

You will need UUIDs of both the gateway ('Online') and target ('Offline') devices, as well as your username and, if possible, the IP address of the target device (by default, the last seen 'Online' state IP address will be used if the IP is not passed). Once you have these details, you can carry this out by executing the following on your host machine:

$ ssh -t \

-o LogLevel=ERROR \

-p 22 [email protected] hostvia $UUID_GATEWAY $UUID_TARGET [$IPADDR]Should this not work, it's possible that the IP address has changed (and if it has, you will need to specify the correct address). To find the potentially correct IP address is to SSH into the gateway device and run the following script (which should work for both legacy DropBear SSH daemons and those running on more recent balenaOS installations):

( prefix=192.168.1; \

for i in {2..254}; \

do \

addr=$prefix.$i; \

curl -s -m 1 $addr:22222 --http0.9 | grep -q "SSH-2.0" && echo $addr BALENA DEVICE || echo $addr; \

done \

)Ensure you change the prefix variable to the correct prefix for the local

network before starting. This script will then go through the range $prefix.2

to $prefix.254, and flag those devices it believes are potential balena

devices. This should help you narrow down the address range to try connections

to balena devices, substituting the IP address appropriately in the SSH

connection command.

All IP addresses will be printed by the script, but those

that are potentially balena devices will show up with BALENA DEVICE next to

them. If you have multiple potential UUIDs, you'll need to mix and match UUIDs

and IP addresses until you find a matching combination.

You may also try using mDNS from the gateway device to locate the IP of the

target based on its hostname. Simply ping the .local address and grab the IP

that way:

root@9294512:~# ping -c1 $(hostname).local

PING 9294512.local (172.17.0.1): 56 data bytes

64 bytes from 172.17.0.1: seq=0 ttl=64 time=0.400 ms

--- 9294512.local ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.400/0.400/0.400 ms

...5. Component Checklist

The key to any support is context. As a support agent, you should have enough context from a customer to start an investigation. If you do not, then you should ask for as much context and detail about the device as you can before starting an investigation on the device.

When accessing a device, there are usually some things you can check to see why a device may be in a broken state. Obviously, this depends on the symptoms a customer has reported, as well as those a support agent may have found when running the device diagnostics. However, there are some common issues that can occur to put a device into a broken state that can be quickly fixed.

The following sections discuss some of the first components to check when carrying out on-device support. The components that should be checked and in what order comes down to the context of support, and the symptoms seen.

5.1 Service Status and Journal Logs

balenaOS uses systemd as its init system, and as such almost all the fundamental components in balenaOS run as systemd services. systemd builds a dependency graph of all of its unit files (in which services are defined) to determine the order that these should be started/shutdown in. This is generated when systemd is run, although there are ways to rebuild this after startup and during normal system execution.

Possibly the most important command is journalctl, which allows you to read

the service's journal entries. This takes a variety of switches, the most

useful being:

--follow/-f- Continues displaying journal entries until the command is halted (eg. with Ctrl-C)--unit=<unitFile>/-u <unitFile>- Specifies the unit file to read journal entries for. Without this, all units entries are read.--pager-end/-e- Jump straight to the final entries for a unit.--all/-a- Show all entries, even if long or with unprintable characters. This is especially useful for displaying the service container logs from user containers when applied tobalena.service.

A typical example of using journalctl might be following a service to see

what's occuring. Here's it for the Supervisor, following journal entries in

real time:

root@9294512:~# journalctl --follow --unit=balena-supervisor

-- Journal begins at Fri 2021-08-06 14:40:59 UTC. --

Aug 18 16:56:55 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:57:05 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:58:17 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:58:27 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:58:37 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:58:48 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:58:58 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:59:19 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 16:59:40 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 18 17:00:00 9294512 balena-supervisor[6890]: [info] Reported current state to the cloudAny systemd service can be referenced in the same way, and there are some common commands that can be used with services:

systemctl status <serviceName>- Will show the status of a service. This includes whether it is currently loaded and/or enabled, if it is currently active (running) and when it was started, its PID, how much memory it is notionally (and beware here, this isn't always the amount of physical memory) using, the command used to run it and finally the last set of entries in its journal log. Here's example output from the OpenVPN service:

5.2 Persistent Logs

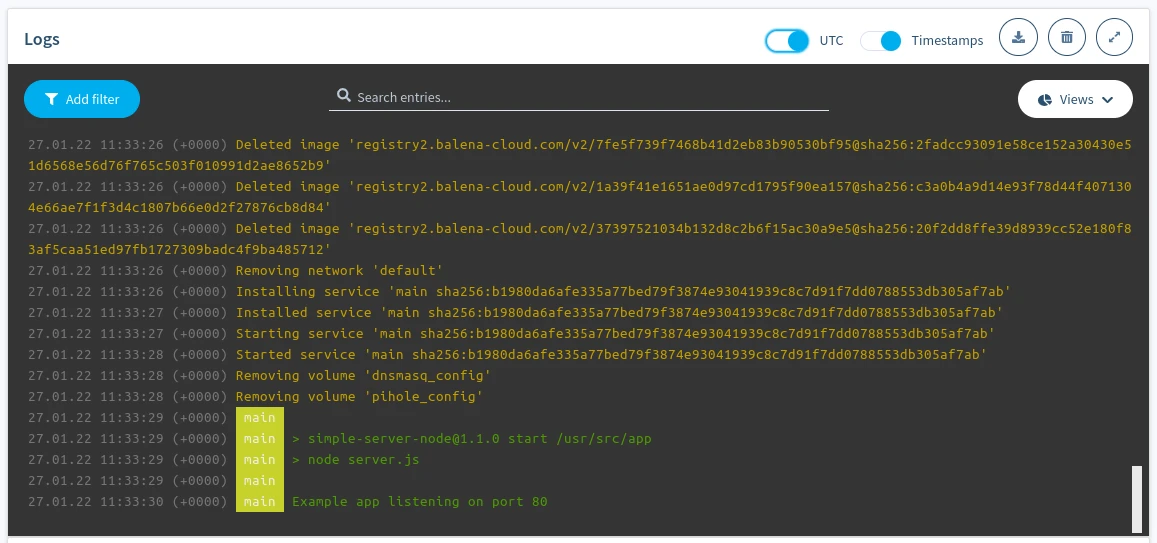

Device logging and the storage of device logs in balenaCloud is designed to be a debugging feature for balena devices. The Logs section in the balenaCloud dashboard can be used to view and download logs from the system and app services running on the device in real-time.

Device logs on the balenaCloud dashboard

Device logs contain anything written to stdout and stderr of app services and the system logs of the device. The dashboard allows logs to be cleared, filtered, searched, and viewed according to the browser timezone (or UTC).

The maximum limit of logs displayed on the dashboard is 1000 lines. This is also the amount of logs stored in the API and available for download. Device logging in balenaCloud isn't meant for long-term, reliable storage of logs. It's instead designed to provide the latest logs from the device for debugging purposes. We plan to expand our logging solution to offer long-term storage and search.

Persistent logging

The ability to read logs from the different system services running in balenaOS is vital in tracking issues. On reboot, these journal logs are cleared, and so examining them will not, for example, give any insight as to why the reboot may have occurred (or which services may have failed, causing a reboot). To alleviate this, balenaOS allows persistent journals (logs). Persistent logs provide vital insight into checking why a reboot occurred and help make debugging easier.

Persistent logging can be enabled using the Configuration tab on the sidebar for either a specific device or fleet-wide. Select 'Activate' to enable persistent logging on a specific device or on all devices in a fleet.

Since logs are stored in the data partition of the hostOS, the device(s) will reboot to activate persistent logging and apply the related settings.

Once persistent logging is enabled, the logs are stored as part of the data partition on the device (either on SD card, eMMC, hard disk, etc.). Logs are located on-device at /var/log/journal/<uuid>,where <uuid> matches the contents of the /etc/machine-id and is not related with the balena device UUID.

Journals can be read like those for any unit file, using journalctl, although the flags passed to the command are slightly different. Here's an example of how to read persistent journals:

root@dee2945:~# cd /var/log/journal/b9ccd869194e4f1381c06967f99b0265/

root@dee2945:/var/log/journal/b9ccd869194e4f1381c06967f99b0265# ls -l

total 2051

-rw-r----- 1 root root 1048576 Jan 13 11:05 system.journal

-rw-r----- 1 root root 1048576 Jan 13 11:05 system@2ad94f188fb64c2da9803557662b57b2-0000000000000001-00058b3468ac9625.journal

root@dee2945:/var/log/journal/b9ccd869194e4f1381c06967f99b0265# journalctl -a --file system.journal

-- Logs begin at Mon 2020-01-13 11:05:06 UTC, end at Mon 2020-01-13 11:05:37 UTC. --

Jan 13 11:05:06 dee2945 systemd-journald[490]: Time spent on flushing to /var is 65.151ms for 795 entries.

Jan 13 11:05:06 dee2945 systemd-journald[490]: System journal (/var/log/journal/b9ccd869194e4f1381c06967f99b0265) is 2.0M, max 8.0M, 5.9M free.

Jan 13 11:05:07 dee2945 systemd[1]: Started Resin persistent logs.

Jan 13 11:05:07 dee2945 resin-persistent-logs[670]: resin-persistent-logs: Persistent logging activated.

Jan 13 11:05:06 dee2945 kernel[664]: [ 14.553592] systemd-journald[490]: Received request to flush runtime journal from PID 1

Jan 13 11:05:07 dee2945 systemd[1]: Started Modem Manager.

Jan 13 11:05:07 dee2945 NetworkManager[740]: <info> [1578913507.2694] NetworkManager (version 1.18.0) is starting... (for the first time)

Jan 13 11:05:07 dee2945 NetworkManager[740]: <info> [1578913507.2698] Read config: /etc/NetworkManager/NetworkManager.conf (etc: os-networkmanager.conf)

Jan 13 11:05:07 dee2945 systemd[1]: Started Network Manager.

Jan 13 11:05:07 dee2945 NetworkManager[740]: <info> [1578913507.2862] bus-manager: acquired D-Bus service "org.freedesktop.NetworkManager"

Jan 13 11:05:07 dee2945 systemd[1]: Reached target Network.

Jan 13 11:05:07 dee2945 systemd[1]: Started OpenVPN.

Jan 13 11:05:07 dee2945 systemd[1]: Starting Resin init service...

Jan 13 11:05:07 dee2945 systemd[1]: Starting DNS forwarder and DHCP server...

Jan 13 11:05:07 dee2945 systemd[1]: Started OS configuration update service.

Jan 13 11:05:07 dee2945 NetworkManager[740]: <info> [1578913507.3047] manager[0x12ec000]: monitoring kernel firmware directory '/lib/firmware'.

Jan 13 11:05:07 dee2945 bash[758]: Board specific initialization...

Jan 13 11:05:07 dee2945 dnsmasq[759]: dnsmasq: syntax check OK.

Jan 13 11:05:07 dee2945 systemd[1]: Started DNS forwarder and DHCP server.

Jan 13 11:05:07 dee2945 systemd[1]: Starting Balena Application Container Engine...

Jan 13 11:05:07 dee2945 systemd[1]: Starting Resin proxy configuration service...

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: started, version 2.78 cachesize 150

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: compile time options: IPv6 GNU-getopt DBus no-i18n no-IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth no-DNSSEC >

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: DBus support enabled: connected to system bus

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: reading /etc/resolv.dnsmasq

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: using nameserver 8.8.8.8#53

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: read /etc/hosts - 6 addresses

Jan 13 11:05:07 dee2945 dnsmasq[763]: dnsmasq[763]: using nameserver 8.8.8.8#53

Jan 13 11:05:07 dee2945 kernel: i2c /dev entries driver

Jan 13 11:05:07 dee2945 kernel[664]: [ 14.974497] i2c /dev entries driverIncreasing size of persistent logs store

Depending on the OS version, the size of persistent logs can be increased to store more logs than the default size (32 MB currently). This can be done by adjusting the SystemMaxUse= setting in /etc/systemd/journald.conf.d/journald-balena-os.conf (refer to journald.conf docs for more information). Mistakes in the configuration can cause hard-to-solve problems, hence we recommend testing the changes on development test devices first.

We consider changes to the size of persistent log store to be a temporary debugging tool, not a long-term solution. In particular, changes made to journald-balena-os.conf will be overwritten when balenaOS is updated.

Do keep in mind persistent logging increases the wear on the storage medium due to increased writes. Refer to long term storage of device logs for ways to offset this.

Long term device logs storage

If you are dealing with excessive logs, persistent logging might not be a reliable long-term solution. Persistent logging increases writes on the device's storage media, which results in higher wear and tear over time.

Instead, device logs can be streamed using the supervisor API. Refer to the Supervisor's journald endpoint. Through this endpoint, device logs can be streamed to whichever cloud monitoring platform you use to store logs reliably over time. For example, using a solution like Datadog.

6. Determining Networking Issues

There are some common networking issues that can stop several major components (VPN, Supervisor, balenaEngine) from working correctly.

The first thing to check would be confirming if the networking requirements are being met.

Additionally, services running on the device themselves may have networking requirements which may not be met. For example, a service may need to send data to a server, but the server is offline and unreachable, and maybe the service isn't designed to handle these failures.

In general, debugging networking issues also gets easier the more you experiment with making changes to networking interfaces and routes on a device. The following sections describe some of the more common network failure modes, as well as how to track them down. Be aware that several of these problems may also occur at the same time, with one problem causing others to appear. For example, NTP failure could stop the date from being correct, which can lead to the VPN failing to connect (as the certificate is probably not yet date-valid) as well as the Supervisor failing to download updates (for the same reason).

6.1 NTP Failure

Service: chronyd.service

CLI: chronyc

Network Time Protocol is important because it allows devices without RTCs (Real-Time Clocks) to retrieve the correct date and time from Internet based servers that run on an extremely fine granularity. At first glance, this may not seem significant, but devices such as the Raspberry Pi do not include an RTC and so when balenaOS is first booted, it sets the time when the release of the OS was built. Clearly, this could be days, weeks, months or even years behind the current time. Because almost all the balena services that work with applications work with balenaCloud endpoints, it is extremely important that the date and time be set correctly, else SSL-based connections (including HTTPS connections) will be rejected because the certificates presented will appear to be date invalid when compared to the system clock.

Depending on how skewed the device date is from the real date, this can manifest as several different issues:

- Refusal to connect to the balenaCloud VPN

- Refusal to download configuration from the API

- Refusal by the Supervisor to download the latest release

Examining the status of the chronyd service can show these symptoms, along

with the simple date command:

root@9294512:~# date

Fri Aug 19 09:11:43 UTC 2022If the date reported by the device differs to the current date and time, then there is most probably a problem with the time service.

Before continuing with this exercise, reboot or power down/power up and wait for it to come online before SSHing into it.

Ensure you know the local IP address of the debug device (or use balena scan

to find the hostname of your device), and SSH into it like this (where

10.0.0.197 is the IP address of your device, or <host>.local name):

$ balena ssh 10.0.0.197

Last login: Fri Aug 19 09:09:37 2022

root@9294512:~#Your device should be connected, bring up the Dashboard page for the device. It should be 'Online' and running the pushed release code.

We'll demonstrate an NTP failure by making some manual changes to the date:

root@9294512:~# date -s "23 MAR 2017 12:00:00"

Thu Mar 23 12:00:00 UTC 2017

root@9294512:~# systemctl restart openvpnAlmost immediately, you'll see that the device status moves to 'Online (Heartbeat only)'. So, why has this happened? Wait until the device comes back online, then ssh back in and take a look in the OpenVPN logs:

root@9294512:~# journalctl --follow -n 300 -u openvpn.service

-- Journal begins at Thu 2017-03-23 12:00:02 UTC. --

Mar 23 12:00:24 9294512 openvpn[135086]: Thu Mar 23 12:00:24 2017 Attempting to establish TCP connection with [AF_INET]34.226.166.12:443 [nonblock]

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TCP connection established with [AF_INET]34.226.166.12:443

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TCP_CLIENT link local: (not bound)

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TCP_CLIENT link remote: [AF_INET]34.226.166.12:443

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TLS: Initial packet from [AF_INET]34.226.166.12:443, sid=416e63eb ed87172e

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 VERIFY ERROR: depth=1, error=certificate is not yet valid: C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=open-balena-vpn-rootCA

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 OpenSSL: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TLS_ERROR: BIO read tls_read_plaintext error

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TLS Error: TLS object -> incoming plaintext read error

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 TLS Error: TLS handshake failed

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 Fatal TLS error (check_tls_errors_co), restarting

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 SIGUSR1[soft,tls-error] received, process restarting

Mar 23 12:00:25 9294512 openvpn[135086]: Thu Mar 23 12:00:25 2017 Restart pause, 5 second(s)

Mar 23 12:00:30 9294512 openvpn[135086]: Thu Mar 23 12:00:30 2017 NOTE: the current --script-security setting may allow this configuration to call user-defined scripts

Mar 23 12:00:30 9294512 openvpn[135086]: Thu Mar 23 12:00:30 2017 TCP/UDP: Preserving recently used remote address: [AF_INET]3.225.166.106:443

Mar 23 12:00:30 9294512 openvpn[135086]: Thu Mar 23 12:00:30 2017 Socket Buffers: R=[131072->131072] S=[16384->16384]There's a bit to wade through here, but the first line shows the OpenVPN

successfully finalizing a connection to the balenaCloud VPN backend. However,

we then see our manual restart of the openvpn.service unit file

(Mar 23 12:00:06 9294512 openvpn[2639]: Thu Mar 23 12:00:06 2017 SIGTERM[hard,] received, process exiting)

and then it starting up again. But whilst it initializes, you'll note that

whilst trying to connect it found a problem in the verification stage:

Aug 18 10:51:45 9294512 openvpn[2639]: Thu Aug 18 10:51:45 2022 VERIFY OK: depth=0, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=vpn.balena-cloud.com

Mar 23 12:00:07 9294512 openvpn[135086]: Thu Mar 23 12:00:07 2017 VERIFY ERROR: depth=1, error=certificate is not yet valid: C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=open-balena-vpn-rootCA

Mar 23 12:00:07 9294512 openvpn[135086]: Thu Mar 23 12:00:07 2017 OpenSSL: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failedThe certificate that it fetched from the balenaCloud VPN service is not yet valid. This is because SSL certificates have "valid from" and "valid to" dates. These ensure that they can only be used in a particular time window, and if the current time falls outside of that window then any connection using them is invalid. In this case, because we've set the time back to 2017, the date doesn't fall within that window and the connection is aborted by the client.

But if the date is incorrect, why has the device reconnected? Run the following on the device:

root@9294512:~# date

Fri Aug 19 09:25:45 UTC 2022So the date's actually now correct. This is because the NTP service

(chronyd.service) has eventually noticed that there's a mismatch in the

set time on the device, and the time from one of it's sources. Let's look at

the journal for it:

root@9294512:~# journalctl --no-pager -u chronyd.service

Aug 18 10:51:01 9294512 healthdog[2011]: 2022-08-18T10:51:01Z chronyd version 4.0 starting (+CMDMON +NTP +REFCLOCK +RTC -PRIVDROP -SCFILTER -SIGND +ASYNCDNS -NTS -SECHASH +IPV6 -DEBUG)

Mar 23 12:01:09 9294512 healthdog[2011]: 2017-03-23T12:01:09Z Backward time jump detected!

Mar 23 12:01:09 9294512 healthdog[2011]: 2017-03-23T12:01:09Z Cannot synchronize: no selectable sources

Mar 23 12:01:19 9294512 healthdog[135156]: [chrony-healthcheck][INFO] No online NTP sources - forcing poll

Mar 23 12:01:19 9294512 healthdog[2011]: 2017-03-23T12:01:19Z System clock was stepped by -0.000000 seconds

Mar 23 12:01:25 9294512 healthdog[2011]: 2017-03-23T12:01:25Z Selected source 198.199.14.101 (0.resinio.pool.ntp.org)

Mar 23 12:01:25 9294512 healthdog[2011]: 2017-03-23T12:01:25Z System clock wrong by 170630052.229025 seconds

Aug 19 09:15:37 9294512 healthdog[2011]: 2022-08-19T09:15:37Z System clock was stepped by 170630052.229025 secondsAs you can see, it selected a source and set the time back to the correct current time. This had a knock on effect, in that the openvpn service (VPN/cloudlink) reattempted to connect to the backend:

root@9294512:~# journalctl -f -n 100 -u openvpn

Mar 23 12:01:21 9294512 openvpn[135086]: Thu Mar 23 12:01:21 2017 Fatal TLS error (check_tls_errors_co), restarting

Mar 23 12:01:21 9294512 openvpn[135086]: Thu Mar 23 12:01:21 2017 SIGUSR1[soft,tls-error] received, process restarting

Mar 23 12:01:21 9294512 openvpn[135086]: Thu Mar 23 12:01:21 2017 Restart pause, 5 second(s)

Aug 19 09:15:39 9294512 openvpn[135086]: Fri Aug 19 09:15:39 2022 NOTE: the current --script-security setting may allow this configuration to call user-defined scripts

Aug 19 09:15:39 9294512 openvpn[135086]: Fri Aug 19 09:15:39 2022 TCP/UDP: Preserving recently used remote address: [AF_INET6]2600:1f18:6600:7f02:bda3:7af0:e21:425b:443

Aug 19 09:15:39 9294512 openvpn[135086]: Fri Aug 19 09:15:39 2022 Socket Buffers: R=[131072->131072] S=[16384->16384]

Aug 19 09:15:39 9294512 openvpn[135086]: Fri Aug 19 09:15:39 2022 Attempting to establish TCP connection with [AF_INET6]2600:1f18:6600:7f02:bda3:7af0:e21:425b:443 [nonblock]

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 TCP connection established with [AF_INET6]2600:1f18:6600:7f02:bda3:7af0:e21:425b:443

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 TCP_CLIENT link local: (not bound)

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 TCP_CLIENT link remote: [AF_INET6]2600:1f18:6600:7f02:bda3:7af0:e21:425b:443

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 TLS: Initial packet from [AF_INET6]2600:1f18:6600:7f02:bda3:7af0:e21:425b:443, sid=b355e635 6170b76f

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 VERIFY OK: depth=1, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=open-balena-vpn-rootCA

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 VERIFY KU OK

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 Validating certificate extended key usage

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 ++ Certificate has EKU (str) TLS Web Server Authentication, expects TLS Web Server Authentication

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 VERIFY EKU OK

Aug 19 09:15:40 9294512 openvpn[135086]: Fri Aug 19 09:15:40 2022 VERIFY OK: depth=0, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=vpn.balena-cloud.comThis time the connection has been verified and the device has come online. This

shows what might have happened if someone had manually set the date, but what

happens if it doesn't recover? This is a good example of where the NTP port

might have been blocked, so that the device couldn't sync it's time. It might

also be possible that the chronyd.service unit may have crashed or stopped

for some reason, and restarting it would solve the issue.

Changes such as this don't just affect the online status of the device. Let's stop the chrony service completely (so it can't correctly resync the time) and change the date again:

root@9294512:~# systemctl stop chronyd.service

root@9294512:~# date -s "23 MAR 2017 12:00:00"Now from your development machine, again push the source code from the

multicontainer-app directory:

$ balena push DebugFleetOnce the build has completed, the device should try and download the updated release. However, you'll notice that the download doesn't start and no changes are made. The Dashboard stays static. Why is this? Well as you've probably guessed, it's for the same reasons that the VPN connection doesn't work. Run the following on your device:

root@9294512:~# journalctl -n 100 --no-pager -u balena-supervisor

-- Journal begins at Thu 2017-03-23 12:00:00 UTC, ends at Fri 2022-08-19 09:33:22 UTC. --

Aug 19 07:25:20 9294512 balena-supervisor[6890]: [debug] Attempting container log timestamp flush...

Aug 19 07:25:20 9294512 balena-supervisor[6890]: [debug] Container log timestamp flush complete

Aug 19 07:27:06 9294512 balena-supervisor[6890]: [api] GET /v1/healthy 200 - 3.025 ms

Mar 23 12:00:02 9294512 balena-supervisor[6890]: [info] Retrying current state report in 15 seconds

Mar 23 12:00:02 9294512 balena-supervisor[6890]: [event] Event: Device state report failure {"error":"certificate is not yet valid"}

Mar 23 12:00:06 9294512 balena-supervisor[6890]: [info] VPN connection is not active.As you can see, the certificate is again not valid as the current device time does not fall within the validity window, and so the Supervisor won't pull the latest release. If we restart chrony, this will be rectified and the Supervisor will, after a short delay, start the update:

root@9294512:~# systemctl start chronyd.service

root@9294512:~# journalctl -f -u balena-supervisor

-- Journal begins at Thu 2017-03-23 12:00:20 UTC. --

Mar 23 12:04:08 9294512 balena-supervisor[6890]: [info] Retrying current state report in 240 seconds

Mar 23 12:04:08 9294512 balena-supervisor[6890]: [event] Event: Device state report failure {"error":"certificate is not yet valid"}

Aug 19 09:55:20 9294512 balena-supervisor[6890]: [debug] Attempting container log timestamp flush...

Aug 19 09:55:20 9294512 balena-supervisor[6890]: [debug] Container log timestamp flush complete

Aug 19 09:55:41 9294512 balena-supervisor[6890]: [api] GET /v1/device 200 - 24.495 ms

Aug 19 09:55:55 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 19 09:56:48 9294512 balena-supervisor[6890]: [info] Applying target state

Aug 19 09:56:49 9294512 balena-supervisor[6890]: [event] Event: Image removal {"image":{"name":"registry2.balena-cloud.com/v2/876c57f4b5dde80de9b0d01e325bcbfe@sha256:6ebc43e800b347004ec6945806b12d4111a2450f63544366c9961fab0caac2cd","appId":1958513,"serviceId":1706634,"serviceName":"backend","imageId":5298767,"releaseId":2265189,"dependent":0,"appUuid":"85e44a78a40a4d78ae1243caca2424dc","commit":"4101191493a1ffc54bec9101e045bacf"}}

Aug 19 09:56:49 9294512 balena-supervisor[6890]: [event] Event: Image removal {"image":{"name":"registry2.balena-cloud.com/v2/e40d992f529b1567b0f2cc63f9fa877a@sha256:45c002b1bb325c1b93ff333a82ff401c9ba55ca7d00118b31a1c992f6fc5a4a4","appId":1958513,"serviceId":1706633,"serviceName":"frontend","imageId":5298766,"releaseId":2265189,"dependent":0,"appUuid":"85e44a78a40a4d78ae1243caca2424dc","commit":"4101191493a1ffc54bec9101e045bacf"}}

Aug 19 09:56:50 9294512 balena-supervisor[6890]: [debug] Finished applying target state

Aug 19 09:56:50 9294512 balena-supervisor[6890]: [success] Device state apply success

Aug 19 09:56:58 9294512 balena-supervisor[6890]: [info] Reported current state to the cloud

Aug 19 09:57:20 9294512 balena-supervisor[6890]: [api] GET /v1/healthy 200 - 4.198 ms

...This shows the importance of a working service such as timesetting, and how this can affect the system as a whole. As a note, be aware that not every device relies completely on NTP. Some devices, such as an Intel NUC, also have battery backed services including an RTC, which means that even if NTP is not working the time may look at first glance as though it's correct. However, if NTP is not operational even an RTC will eventually suffer from clock skew (the slow movement away from the real time due to drift) which will eventually cause issues.

chronyc is a command-line utility that can be used to interoperate with the

NTP daemon. chronyc has many commands, the most useful are:

sources- A list of all the current NTP sources being used by the NTP daemon.reselect- Forces the NTP daemon to reselect the best time synchronization source.tracking- Information about the system clock itself, including skew.ntpdata- Detailed information on all the current NTP sources.

6.2 DNS Issues

Service: dnsmasq.service

DNS (Domain Name Service) functionality allows the IP address of a hostname to be retrieved for use by anything that uses endpoints on the Internet (that isn't specified by an IP address). Any executable that uses a hostname to make a connection to that host always uses DNS to get the IP address to make that connection.

For this reason, DNS is vital in the reliable operation of a balena device as it

provides the ability to lookup *.balena-cloud.com hostnames to allow the

download of releases, reporting the device state, connection to the VPN,

etc.

DNS is provided by the dnsmasq.service unit, which uses a default

configuration located at /etc/dnsmasq.conf and a list of nameservices

in /etc/resolv.dnsmasq. This itself is derived from the

/var/run/resolvconf/interface/NetworkManager file.

The DNSMasq service runs at local address 127.0.0.2. This is used, because

it allows customer services to provide their own DNS if required

and therefore does not clash with them.

By default, the external name servers used are the Google primary and secondary

at 8.8.8.8 and 8.8.4.4. However, these can be overridden by modifying the

/mnt/boot/config.json file and adding a dnsServers property, with a comma

separated list of the IP addresses of the nameservers to use (see

the docs for more

information).

SSH into your device using it's local IP address:

$ balena ssh 10.0.0.197or using it's UUID:

$ balena ssh 9294512We're going to modify the DNS servers to point at one that doesn't exist, just to show what happens. SSH into your device as above, then run the following:

root@9294512:~# jq '.dnsServers = "1.2.3.4"' /mnt/boot/config.json > /mnt/data/config.json && mv /mnt/data/config.json /mnt/boot/config.json

root@9294512:~# mount -o remount,rw /

root@9294512:~# mv /etc/resolv.dnsmasq /etc/resolv.dnsmasq.moved

root@9294512:~# sync

root@9294512:~# rebootThis will move the default DNSMasq resolver config file, so that it's not

picked up. Additionally, it will modify the configuration to set the nameserver

to use as 1.2.3.4. As this isn't a valid nameserver, nothing will get the

right address to make connections. Note that usually, remounting the root FS

as writeable is a very risky move, and should not be carried out without good

reason!

After a while, once the device has rebooted, SSH back into the device using the local IP address, and look

at the dnsmasq.service unit:

root@9294512:~# systemctl status dnsmasq

● dnsmasq.service - DNS forwarder and DHCP server

Loaded: loaded (/lib/systemd/system/dnsmasq.service; enabled; vendor preset: enabled)

Drop-In: /etc/systemd/system/dnsmasq.service.d

└─dnsmasq.conf

Active: active (running) since Fri 2022-08-19 10:11:53 UTC; 35s ago

Process: 1791 ExecStartPre=/usr/bin/dnsmasq --test (code=exited, status=0/SUCCESS)

Main PID: 1792 (dnsmasq)

Tasks: 1 (limit: 1878)

Memory: 344.0K

CGroup: /system.slice/dnsmasq.service

└─1792 /usr/bin/dnsmasq -x /run/dnsmasq.pid -a 127.0.0.2,10.114.102.1 -7 /etc/dnsmasq.d/ -r /etc/resolv.dnsmasq -z --servers-file=/run/dnsmasq.servers -k --lo>

Aug 19 10:11:53 9294512 dnsmasq[1791]: dnsmasq: syntax check OK.

Aug 19 10:11:53 9294512 dnsmasq[1792]: dnsmasq[1792]: started, version 2.84rc2 cachesize 150

Aug 19 10:11:53 9294512 dnsmasq[1792]: dnsmasq[1792]: compile time options: IPv6 GNU-getopt DBus no-UBus no-i18n no-IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth no->

Aug 19 10:11:53 9294512 dnsmasq[1792]: dnsmasq[1792]: DBus support enabled: connected to system bus

Aug 19 10:11:53 9294512 dnsmasq[1792]: dnsmasq[1792]: read /etc/hosts - 6 addresses

Aug 19 10:11:53 9294512 dnsmasq[1792]: dnsmasq[1792]: using nameserver 1.2.3.4#53

Aug 19 10:12:06 9294512 dnsmasq[1792]: dnsmasq[1792]: failed to access /etc/resolv.dnsmasq: No such file or directoryAs you can see, it's tried, and failed to get the /etc/resolv.dnsmasq file

and has just the one nameserver to use, 1.2.3.4.

Now let's look at the Supervisor:

root@9294512:~# systemctl status balena-supervisor

● balena-supervisor.service - Balena supervisor

Loaded: loaded (/lib/systemd/system/balena-supervisor.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2022-08-19 10:12:12 UTC; 1min 5s ago

Process: 2213 ExecStartPre=/usr/bin/balena stop resin_supervisor (code=exited, status=1/FAILURE)

Process: 2239 ExecStartPre=/usr/bin/balena stop balena_supervisor (code=exited, status=0/SUCCESS)

Process: 2258 ExecStartPre=/bin/systemctl is-active balena.service (code=exited, status=0/SUCCESS)

Main PID: 2259 (start-balena-su)

Tasks: 11 (limit: 1878)

Memory: 12.4M

CGroup: /system.slice/balena-supervisor.service

├─2259 /bin/sh /usr/bin/start-balena-supervisor

├─2261 /proc/self/exe --healthcheck /usr/lib/balena-supervisor/balena-supervisor-healthcheck --pid 2259

└─2405 balena start --attach balena_supervisor

Aug 19 10:12:19 9294512 balena-supervisor[2405]: [info] Waiting for connectivity...

Aug 19 10:12:19 9294512 balena-supervisor[2405]: [debug] Starting current state report

Aug 19 10:12:19 9294512 balena-supervisor[2405]: [debug] Starting target state poll

Aug 19 10:12:19 9294512 balena-supervisor[2405]: [debug] Spawning journald with: chroot /mnt/root journalctl -a --follow -o json _SYSTEMD_UNIT=balena.service

Aug 19 10:12:20 9294512 balena-supervisor[2405]: [debug] Finished applying target state

Aug 19 10:12:20 9294512 balena-supervisor[2405]: [success] Device state apply success

Aug 19 10:12:34 9294512 balena-supervisor[2405]: [error] LogBackend: unexpected error: Error: getaddrinfo EAI_AGAIN api.balena-cloud.com

Aug 19 10:12:34 9294512 balena-supervisor[2405]: [error] at GetAddrInfoReqWrap.onlookupall [as oncomplete] (dns.js:76:26)

Aug 19 10:12:49 9294512 balena-supervisor[2405]: [info] Retrying current state report in 15 seconds

Aug 19 10:12:49 9294512 balena-supervisor[2405]: [event] Event: Device state report failure {"error":"getaddrinfo EAI_AGAIN api.balena-cloud.com"}As you can see, the Supervisor is not at all happy, unable to connect to the API

and failing to get the current target state. This is because it is unable to get

an IP address for api.balena-cloud.com.

Worst still, OpenVPN will not be able to resolve the VPN hostname, and so the

device will have dropped 'Offline' (check the Dashboard or use balena devices)

to verify this.

Many other services will be in the same state. A good test of whether DNS is working is to try to get to a known service on the internet, including balenaCloud and Google:

root@9294512:~# curl https://google.com

curl: (6) Could not resolve host: google.com

root@9294512:~# curl https://api.balena-cloud.com/ping

curl: (6) Could not resolve host: api.balena-cloud.comBoth of these should succeed if DNS is working, but as you can see, both give

a Could not resolve host error. This is an extremely good pointer that DNS

is failing.

One thing to be aware of is that sometimes DNS fails not because of an invalid server, but because the traffic for port 53 (the DNS port) is being firewalled (see later section).

For now, we're going to put the DNS service back how it should be:

root@9294512:~# mount -o remount,rw /

root@9294512:~# mv /etc/resolv.dnsmasq.moved /etc/resolv.dnsmasq

root@9294512:~# jq -M 'del(.dnsServers)' /mnt/boot/config.json > /mnt/data/config.json && mv /mnt/data/config.json /mnt/boot/config.json

root@9294512:~# sync

root@9294512:~# rebootThis will put the device back into its previously working DNS state, and it will reconnect to the network.

6.3 OpenVPN

Services: openvpn.service, os-config.service

Device connections to balenaCloud vary depending on the operation being carried out, for example registering the device is carried out by the Supervisor contacting the API endpoint directly.

However, a large part of the cloud-to-device functionality is tunneled by the balena VPN. This include various data such as the device status, actions, SSH access, public URLs etc.

Initially, the os-config.service unit requests a block of configuration data

from the API, once the device has been registered against the fleet. Let's

have a look at the journal output from a device that's been freshly provisioned

and started for the first time:

root@f220105:~# journalctl -f -n 300 -u os-config

-- Journal begins at Fri 2021-08-06 14:40:59 UTC. --

Aug 06 14:41:03 localhost os-config[1610]: Fetching service configuration from https://api.balena-cloud.com/os/v1/config...

Aug 06 14:41:03 localhost os-config[1610]: https://api.balena-cloud.com/os/v1/config: error trying to connect: failed to lookup address information: Name or service not known

Aug 06 14:41:09 f220105 os-config[1610]: https://api.balena-cloud.com/os/v1/config: error trying to connect: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed:../openssl-1.1.1l/ssl/statem/statem_clnt.c:1914: (certificate is not yet valid)

Aug 19 10:24:11 f220105 os-config[1610]: Service configuration retrieved

Aug 19 10:24:11 f220105 os-config[1610]: Stopping balena-supervisor.service...

Aug 19 10:24:11 f220105 os-config[1610]: Awaiting balena-supervisor.service to exit...

Aug 19 10:24:11 f220105 os-config[1610]: Stopping prepare-openvpn.service...

Aug 19 10:24:11 f220105 os-config[1610]: Stopping openvpn.service...

Aug 19 10:24:11 f220105 os-config[1610]: Awaiting prepare-openvpn.service to exit...

Aug 19 10:24:11 f220105 os-config[1610]: Awaiting openvpn.service to exit...

Aug 19 10:24:11 f220105 os-config[1610]: /etc/openvpn/ca.crt updated

Aug 19 10:24:11 f220105 os-config[1610]: /etc/openvpn/openvpn.conf updated

Aug 19 10:24:11 f220105 os-config[1610]: Starting prepare-openvpn.service...

Aug 19 10:24:11 f220105 os-config[1610]: Starting openvpn.service...

Aug 19 10:24:11 f220105 os-config[1610]: /home/root/.ssh/authorized_keys_remote updated

Aug 19 10:24:11 f220105 os-config[1610]: Starting balena-supervisor.service...

...You can see that, once registered, the os-config service requested the

configuration for the device from the API, received it, and then used the

returned data to:

- Stop the Supervisor.

- Write the new OpenVPN configuration to the state partition.

- Write the correct root CA for the VPN to the state partition.

- Restarted the OpenVPN service.

- Updated the authorized keys.

Quick Note: Customers can also specify their own keys to access devices

(both development and production) in a couple of ways. The first is adding

an os.sshKeys[] property, which is an array of public keys, to the

/mnt/boot/config.json file. There is also upcoming support for user

custom keys being added to the API backend.

As you can see, the OpenVPN configuration and Certificate Authority certificate is fetched from the API and not baked in. This allows balena to update their certificates, configurations and keys on the fly, ensuring we can tailor the VPN for the best possible experience and security. However, this does require that the API endpoint is available to periodically refresh the config.

So, what happens if the API isn't available? If this occurs on first boot, then the device wouldn't be able to register, so there wouldn't be a configuration to fetch for it.

On subsequent boots, the API not being initially available isn't as much of an

issue, because there is already a configuration and certificate for the VPN

which can be used until os-config can contact the API to check for new

configurations (and it is unlikely to have changed in the meantime).

Let's now look at the current OpenVPN journal entries on your device. SSH into the device:

$ balena ssh 10.0.0.197

root@f220105:~# journalctl -f -n 200 -u openvpn.service

-- Journal begins at Fri 2021-08-06 14:40:59 UTC. --

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 OpenVPN 2.4.7 aarch64-poky-linux-gnu [SSL (OpenSSL)] [LZO] [LZ4] [EPOLL] [MH/PKTINFO] [AEAD] built on Feb 20 2019

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 library versions: OpenSSL 1.1.1l 24 Aug 2021, LZO 2.10

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 NOTE: the current --script-security setting may allow this configuration to call user-defined scripts

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 TCP/UDP: Preserving recently used remote address: [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 Socket Buffers: R=[131072->131072] S=[16384->16384]

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 Attempting to establish TCP connection with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443 [nonblock]

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP connection established with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP_CLIENT link local: (not bound)

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP_CLIENT link remote: [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 NOTE: UID/GID downgrade will be delayed because of --client, --pull, or --up-delay

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TLS: Initial packet from [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443, sid=f63c5c5a 9d0382c8

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 WARNING: this configuration may cache passwords in memory -- use the auth-nocache option to prevent this

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY OK: depth=1, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=open-balena-vpn-rootCA

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY KU OK

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 Validating certificate extended key usage

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 ++ Certificate has EKU (str) TLS Web Server Authentication, expects TLS Web Server Authentication

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY EKU OK

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY OK: depth=0, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=vpn.balena-cloud.com

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 Control Channel: TLSv1.3, cipher TLSv1.3 TLS_AES_256_GCM_SHA384, 2048 bit RSA

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 [vpn.balena-cloud.com] Peer Connection Initiated with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 SENT CONTROL [vpn.balena-cloud.com]: 'PUSH_REQUEST' (status=1)

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 PUSH: Received control message: 'PUSH_REPLY,sndbuf 0,rcvbuf 0,route 52.4.252.97,ping 10,ping-restart 60,socket-flags TCP_NODELAY,ifconfig 10.242.111.185 52.4.252.97,peer-id 0,cipher AES-128-GCM'

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: timers and/or timeouts modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: --sndbuf/--rcvbuf options modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Socket Buffers: R=[131072->131072] S=[87040->87040]

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: --socket-flags option modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Socket flags: TCP_NODELAY=1 succeeded

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: --ifconfig/up options modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: route options modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: peer-id set

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: adjusting link_mtu to 1627

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 OPTIONS IMPORT: data channel crypto options modified

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Data Channel: using negotiated cipher 'AES-128-GCM'

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Outgoing Data Channel: Cipher 'AES-128-GCM' initialized with 128 bit key

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Incoming Data Channel: Cipher 'AES-128-GCM' initialized with 128 bit key

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 ROUTE_GATEWAY 10.0.0.1/255.255.255.0 IFACE=eth0 HWADDR=dc:a6:32:9e:18:dd

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 TUN/TAP device resin-vpn opened

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 TUN/TAP TX queue length set to 100

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 /sbin/ip link set dev resin-vpn up mtu 1500

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 /sbin/ip addr add dev resin-vpn local 10.242.111.185 peer 52.4.252.97

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 /etc/openvpn-misc/upscript.sh resin-vpn 1500 1555 10.242.111.185 52.4.252.97 init

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 /sbin/ip route add 52.4.252.97/32 via 52.4.252.97

Aug 19 10:24:55 f220105 openvpn[2656]: ip: RTNETLINK answers: File exists

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 ERROR: Linux route add command failed: external program exited with error status: 2

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 GID set to openvpn

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 UID set to openvpn

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Initialization Sequence CompletedThere's a lot to take in here, but there are some key lines here that show that the VPN has negotiated with the backend and is connected and routing traffic:

Aug 19 10:24:53 f220105 openvpn[2632]: Fri Aug 19 10:24:53 2022 Attempting to establish TCP connection with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443 [nonblock]

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP connection established with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP_CLIENT link local: (not bound)

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TCP_CLIENT link remote: [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 TLS: Initial packet from [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443, sid=f63c5c5a 9d0382c8

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 WARNING: this configuration may cache passwords in memory -- use the auth-nocache option to prevent this

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY OK: depth=1, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=open-balena-vpn-rootCA

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY KU OK

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 Validating certificate extended key usage

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 ++ Certificate has EKU (str) TLS Web Server Authentication, expects TLS Web Server Authentication

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY EKU OK

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 VERIFY OK: depth=0, C=US, ST=WA, L=Seattle, O=balena.io, OU=balenaCloud, CN=vpn.balena-cloud.com

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 Control Channel: TLSv1.3, cipher TLSv1.3 TLS_AES_256_GCM_SHA384, 2048 bit RSA

Aug 19 10:24:54 f220105 openvpn[2632]: Fri Aug 19 10:24:54 2022 [vpn.balena-cloud.com] Peer Connection Initiated with [AF_INET6]2600:1f18:6600:7f01:dc24:54f2:d95f:abc0:443

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 SENT CONTROL [vpn.balena-cloud.com]: 'PUSH_REQUEST' (status=1)

...

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 GID set to openvpn

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 UID set to openvpn

Aug 19 10:24:55 f220105 openvpn[2632]: Fri Aug 19 10:24:55 2022 Initialization Sequence CompletedThe first part of the journal shows that the device has initiated contact with

the VPN backend, accepted the certificate passed to it, and then started the

initialisation of the VPN. We've cut some option stuff out, but the final lines

state Initialization Sequence Completed which is the sign the VPN is up

and running.

There are some things that can affect the VPN. As we saw when discussing NTP, an incorrect time might force the VPN to reject the server certificate, as it wouldn't be in a valid time window.

Additionally, other things that might affect the VPN include the firewalling of non-HTTPS traffic (the VPN uses port 443 as well), the inability to retrieve an initial configuration/CA certificate and Deep Packet Inspection routers that require alternative certificates (we'll go into this later).

Another stumbling block is that if there are VPN issues then this usually means the VPN isn't working, which means the device is not able to go into an 'Online' state, and thus SSHing from the balena CLI or the Dashboard is not possible. In these cases, your best hope is that there is another balena device that is on the same network, to use as a gateway to the failing device (See: 4. Accessing a Device using a Gateway Device). If every balena device on the network is failing to connect to the VPN, this usually indicates the network is being overly restrictive, which becomes a customer issue.

6.4 Firewalled Endpoints

Balena devices work on a variety of networks, but they do require the basic networking environment as listed in 6. Determining Networking Issues.

Firewalls are a sensible precaution in any network, be they personal or corporate. A large number of firewalls are built to provide freedom for devices to initiate connections to an outgoing connection on the wider Internet (where a device in the network can create a connection and receive all data from the Internet based on that connection), but to refuse any incoming connection from the Internet, unless specifically allowed.

On that note, firewalls can include blocklists and allowlists. Most industrial routers and firewalls blocklist everything by default, requiring a set of allowlist IPs and domain names where traffic can be sent/received from.

However, firewalling on some networks can be very aggressive, where without any allowlisting all outgoing and incoming traffic is blocked. Usually, what occurs is that a set list of known ports are allowed to outgoing traffic (and incoming traffic on those connections), but no other traffic is allowed. This is usually tailored by SREs/IT professionals to follow the 'normal' use of nodes on those networks. However, balena devices are sometimes put into these networks and due to the nature of their network requirements, deviate from the 'normal' usage.

Sometimes firewalls can be diagnosed very easily. If a customer has a set of devices on the same network, and they've never come online on that network, it's a fair assumption that a firewall is blocking a required port or ports, and that no traffic is able to make its way out of (or into) the network.

However, sometimes things are slightly more subtle. For example, we've demonstrated what happens when the NTP service is blocked, and the time is greatly skewed. This is sometimes because the network's firewall might be blocking any traffic from/to port 123 (NTP's default port). The same is true for SSL traffic (on port 443).

This can sometimes include traffic to a customer's cloud service. For example, imagine that all the balena requirements are met, so that the device appears to be operating normally, but a customer complains that their device seems to not be able to contact their own cloud servers. It could be that the firewall lets through all the traffic required by balena, but is blocking other arbitrary ports, which might include the ports required by a service on the device.

These are all points which a support engineer should be aware of when investigating a device that is showing abnormal behavior which might be related to a network.

There are some very simple tests that can be carried out to see if most of the network requirements are satisfied:

curlto the API (curl https://api.balena-cloud.com/ping) and VPN (curl https://cloudlink.balena-cloud.com/pingorcurl https://vpn.balena-cloud.com/ping) endpoints to see if a connection is attempted (in the latter case, you'll get an error, but shouldn't get a 'Not found' or 'Timeout' error)- Check

chronyd.serviceto see when it last updated - Ensure that DNS is working (again a

curlto the API endpoint will show if name resolution is working or not) - Ensure that the registry endpoint is not blocked. This will exhibit as the

Supervisor being unable to instruct balenaEngine to pull a release's

service images. A manual attempt at

balena pull <imageDetails>should allow you to see whether any connection is made, or whether it timeouts/ disconnects.

6.4.1 Deep Packet Inspection

Some firewalls and routers implement further restrictions on traffic, namely

that of Deep Packet Inspection (DPI). This is a system where all (or sometimes

select) network packets have their headers and payload inspected to

ensure there is nothing contained which should not be allowed in/out of the

network. This raises several issues of their own. Whilst 'clear' traffic (such

as HTTP, telnet, FTP, etc.) is easy to inspect due to their unencrypted

nature, when it comes to SSL based traffic (including HTTPS and VPN), this

becomes impossible without either the keys that were used to initiate

connections or by terminating the traffic at the DPI firewall/router itself.

Because of this, most DPI networks operate by acting as a proxy for traffic. That is, any node on the network makes connections as normal, but the connections are actually made to the DPI router, which then inspects the traffic and then opens the 'real' connection to the originally requested node on the Internet. Because it is the terminating point for both incoming and outgoing traffic it is able to inspect all the traffic passing it going both out and coming in. However, to do this usually means that nodes within the network need to install a new certificate specifically for the DPI firewall/router, as all encrypted traffic (such as SSL) ends up as being shown to have come from the DPI and not from the endpoint requested (as the DPI has repackaged the traffic).

To determine whether DPI applies to a device, the following commands may be used:

$ curl https://api.balena-cloud.com/ping

curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none

$ openssl s_client -connect api.balena-cloud.com:443

CONNECTED(00000003)

depth=1 C = IE, ST = Dublin, L = Dublin, O = Royal College Of Surgeons In Ireland, OU = IT, CN = RCSI-TLS-PROTECT

verify error:num=20:unable to get local issuer certificate

verify return:1

depth=0 CN = balena.io

verify return:1

...Compare the output of the openssl command on your laptop (where the curl

command succeeds) with the output on the device (where the curl command fails).

Completely different SSL certificate chains may be printed out, indicating that

DPI is in place.

Balena devices are able to accommodate this if it is known a DPI network is in

use, by adding the balenaRootCA property to the /mnt/boot/config.json file,

where the value is the DPI's root Certificate Authority (CA) that has been

base64 encoded. This CA certificate should be supplied by the network operator

7. Working with the config.json File

About config.json

A balenaOS image, by default, does not include any configuration information

to associate it with a fleet. When a customer downloads a provisioning

image from the Dashboard, balenaCloud injects the configuration for the specific

fleet the image is being downloaded for. Similarly, the balena CLI allows

the download of a balenaOS image for a device type (and specific version), but

requires that this image has a configuration added (usually via the use of

balena os configure) before flashing to bootable media.

Note: The config.json file is different from the config.txt file, also located in the boot partition, which is used by the Raspberry Pi to set device configuration options.

The behavior of balenaOS can be configured by editing the

config.json file. This file is located in the boot partition accepts a

range of fields to modify the behavior of the host OS.

The boot partition will be the one that shows up, usually named resin-boot.

On-device, the boot partition is mounted at /mnt/boot/. Assuming you're

still logged into your debug device, run the following:

root@f220105:~# ls -lah /mnt/boot

total 6.6M

drwxr-xr-x 6 root root 2.0K Jan 1 1970 .

drwxr-xr-x 7 root root 1.0K Mar 9 2018 ..

drwxr-xr-x 2 root root 512 Aug 19 06:23 .fseventsd

-rwxr-xr-x 1 root root 24 Jul 8 19:55 balena-image

-rwxr-xr-x 1 root root 17K Jul 8 19:55 balenaos.fingerprint

-rwxr-xr-x 1 root root 51K Jul 8 19:55 bcm2711-rpi-4-b.dtb

-rwxr-xr-x 1 root root 51K Jul 8 19:55 bcm2711-rpi-400.dtb

-rwxr-xr-x 1 root root 51K Jul 8 19:55 bcm2711-rpi-cm4.dtb

-rwxr-xr-x 1 root root 516 Jul 8 19:55 boot.scr

-rwxr-xr-x 1 root root 137 Jul 8 19:55 cmdline.txt

-rwxr-xr-x 1 root root 622 Aug 19 10:24 config.json

-rwxr-xr-x 1 root root 36K Jul 8 19:55 config.txt

-rwxr-xr-x 1 root root 2.1K Jul 8 19:55 device-type.json

-rwxr-xr-x 1 root root 0 Jul 8 19:55 extra_uEnv.txt